Deploying to Amazon Managed Workflows for Apache Airflow with CI/CD tools

January 30, 2024

Apache Airflow’s active open source community, familiar Python-based development of directed acyclic graph (DAG) workflows, and extensive library of pre-built integrations have helped it become a leading tool for data scientists and engineers creating data pipelines.

Amazon Managed Workflows for Apache Airflow (Amazon MWAA) is a fully managed service that makes it easier to run open source versions of Apache Airflow on AWS and to build workflows that launch extract-transform-load (ETL) jobs and data pipelines.

To run directed acyclic graphs (DAGs) on an Amazon MWAA environment, copy files to the Amazon Simple Storage Service (Amazon S3) storage bucket attached to your environment, then let Amazon MWAA know where your DAGs and supporting files are located as a part of Amazon MWAA environment setup. Amazon MWAA takes care of synchronizing the DAGs among workers, schedulers, and the web server.

When working with Apache Airflow in MWAA, you either create or update DAG files (by modifying their tasks, operators, or dependencies) or change the supporting files (plugins and requirements) based on your workflow needs. Although both DAGs and supporting files are stored in Amazon S3 and referenced by the Amazon MWAA environment, MWAA applies changes to each of them differently.

DAG files

Amazon MWAA automatically detects and syncs changes from your Amazon S3 bucket to Apache Airflow every 30 seconds. Changes made to Airflow DAGs as stored in the Amazon S3 bucket should be reflected automatically in Apache Airflow.

Supporting files

Updates to supporting files (requirements.txt and plugins.zip) in the Amazon S3 bucket are not reloaded automatically; you must update your environment to load the changes.

You can do this with the aws mwaa update-environment --name <value> --plugins-s3-object-version <value> --plugins-s3-path <value> command for plugins.zip, or the aws mwaa update-environment --name <value> --requirements-s3-object-version <value> --requirements-s3-path <value> command for requirements.txt. You can also use the AWS Management Console to edit an existing Airflow environment, and then select the appropriate versions of the plugins and requirements files in the DAG code in Amazon S3 section.
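As a sketch, assuming the AWS CLI is configured and the bucket and object keys already exist (all values are placeholders), the following function looks up the latest S3 object version of a supporting file with aws s3api head-object and passes it to update-environment. The function name and its argument order are hypothetical.

```shell
#!/usr/bin/env bash
# Point an MWAA environment at the latest S3 object version of a supporting file.
# Usage: update_supporting_file <environment> <bucket> <plugins|requirements> <s3-key>
# Hypothetical helper; all argument values are placeholders.
update_supporting_file() {
  local env_name="$1" bucket="$2" kind="$3" key="$4"
  local version_id

  # MWAA buckets are versioned, so each upload gets a new VersionId.
  version_id="$(aws s3api head-object --bucket "$bucket" --key "$key" \
    --query VersionId --output text)" || return 1

  case "$kind" in
    plugins)
      aws mwaa update-environment --name "$env_name" \
        --plugins-s3-path "$key" \
        --plugins-s3-object-version "$version_id"
      ;;
    requirements)
      aws mwaa update-environment --name "$env_name" \
        --requirements-s3-path "$key" \
        --requirements-s3-object-version "$version_id"
      ;;
    *)
      echo "unknown file kind: $kind (expected plugins or requirements)" >&2
      return 1
      ;;
  esac
}
```

For example, update_supporting_file my-mwaa-env my-mwaa-bucket requirements requirements/requirements.txt starts an environment update that uses the newest requirements file.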

After you start the update, wait for the environment status to return to Available; only then are the changes reflected in the Apache Airflow environment.
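A minimal polling sketch follows; the function name, attempt count, and interval are arbitrary choices. It reads Environment.Status with aws mwaa get-environment and stops when the status is AVAILABLE or one of the failed states.

```shell
#!/usr/bin/env bash
# Poll an MWAA environment until its status is AVAILABLE.
# Usage: wait_for_mwaa <environment-name> [max-attempts] [sleep-seconds]
# The defaults (60 attempts, 30 seconds apart) are arbitrary.
wait_for_mwaa() {
  local env_name="$1" max_attempts="${2:-60}" delay="${3:-30}"
  local attempt status

  for ((attempt = 1; attempt <= max_attempts; attempt++)); do
    status="$(aws mwaa get-environment --name "$env_name" \
      --query Environment.Status --output text)" || return 1
    echo "attempt ${attempt}: ${env_name} is ${status}"

    case "$status" in
      AVAILABLE) return 0 ;;
      # These states do not recover on their own, so stop polling.
      UPDATE_FAILED | CREATE_FAILED | UNAVAILABLE) return 1 ;;
    esac
    sleep "$delay"
  done

  echo "timed out waiting for ${env_name}" >&2
  return 1
}
```

A failed update can roll back and leave the environment AVAILABLE again, so it is worth also checking Environment.LastUpdate.Status after the loop returns.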

Although you can manually create and update DAG files using the Amazon S3 console or using the AWS Command Line Interface (AWS CLI), most organizations use a continuous integration and continuous delivery process to release code to their environments.

Continuous integration (CI) is a DevOps software development practice in which developers regularly merge code changes into a central repository, after which automated builds and tests are run. Continuous integration most often refers to the build or integration stage of the software release process and entails both an automation component (for example, a CI or build service) and a cultural component (for example, learning to integrate frequently). Key goals of continuous integration are to find and address bugs faster, improve software quality, and reduce the time it takes to validate and release new software updates.

Continuous delivery (CD) is a software development practice in which code changes are automatically prepared for a release to production. A pillar of modern application development, continuous delivery expands upon continuous integration by deploying all code changes to a testing environment and/or a production environment after the build stage. When CD is properly implemented, developers have a deployment-ready build artifact that has passed through a standardized test process.

For the development lifecycle, we want to simplify the process of moving workflows from developers to Amazon MWAA. Also, we want to minimize manual steps needed, such as manually copying files to Amazon S3 either via the console or command line. We also want to ensure that the workflows (Python code) are checked into source control.

In this post, we explain how to use the following popular code-hosting platforms—along with their native pipelines or an automation server—to allow development teams to do CI/CD for their MWAA DAGs, thereby enabling easier version control and collaboration:

- GitHub with GitHub Actions

- AWS CodeCommit with AWS CodePipeline

- Bitbucket with Bitbucket Pipelines

- Jenkins

- Other CI/CD tools:

  - CircleCI

  - GitLab CI

  - TeamCity

  - Bamboo

We will set up a simple workflow that takes every commit we make in our source code repository and syncs that code to the target DAGs folder, where MWAA will pick it up. Specifically, we will:

- Set up/reuse an existing source code repository, which acts as the single source of truth for Airflow development teams facilitating collaboration and accelerating release velocity.

- Create a workflow pipeline that uses native support available within the tool’s ecosystem to detect changes (creates/updates) in the source code repository, and then synchronize them to the final destination (the S3 Airflow bucket defined for your Amazon MWAA environment).

Although we don’t include validation, testing, or other steps as a part of the pipeline, you can extend it to meet your organization’s CI/CD practices.

Note that this process would sync both your DAGs and supporting files from the source code repository to your Amazon S3 bucket. Although changes to DAGs would automatically reflect in your Amazon MWAA environment, changes made to supporting files require an additional step, as updates to these necessitate an Amazon MWAA environment update.

Prerequisites

Before starting, create an Amazon MWAA environment (if you don’t have one already). If this is your first time using Amazon MWAA, refer to Introducing Amazon Managed Workflows for Apache Airflow (MWAA).

Use with GitHub and GitHub Actions

GitHub offers the distributed version control and source code management (SCM) functionality of Git, plus its own features.

Within GitHub, GitHub Actions uses a concept of a workflow to determine what jobs and steps within those jobs to run. To set this up, first we must create a new directory in our repository that GitHub Actions will watch to know which steps to launch.

Prerequisites

- Access to a GitHub repository

- Ability to configure your GitHub Actions workflows with OpenID Connect (OIDC) to request short-lived access tokens from AWS without using hard-coded, long-lived secrets. To set up GitHub as an OIDC identity provider (IdP) to AWS, follow the steps outlined in Configuring OpenID Connect in Amazon Web Services. You will end up setting up an AWS Identity and Access Management (IAM) role and trust policy in your AWS account. During execution, your GitHub Actions workflow receives a JWT from the GitHub OIDC provider and then uses it to request an access token from AWS for that role.

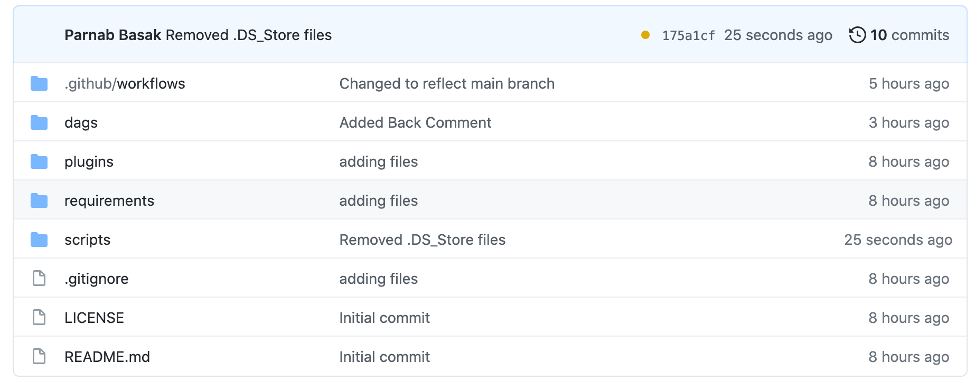

Step 1: Push Apache Airflow source files to your GitHub repository

Configure your GitHub repository to contain the requisite folders and files that would need to sync up with your Amazon MWAA S3 bucket. In the following example, I have configured the subfolders within my main repository:

- dags/ for my Apache Airflow DAGs

- plugins/ for all of my plugin .zip files

- requirements/ for my requirements.txt files

Step 2: Create GitHub Actions workflow

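Create a .github/workflows/ folder to store the workflow file.

Create a .yml file in the .github/workflows/ subfolder that checks out the repository, obtains AWS credentials through OIDC, and runs aws s3 sync against the S3 bucket configured for your MWAA environment.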
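As a minimal sketch, assuming the IAM role from the OIDC prerequisite, the following commands create a workflow file named mwaa-deploy.yml (a hypothetical name) that runs on every push to main, exchanges the GitHub OIDC token for AWS credentials with the aws-actions/configure-aws-credentials action, and syncs the repository to your MWAA bucket. The role ARN, Region, and bucket name are placeholders to replace with your own values.

```shell
#!/usr/bin/env bash
# Create a GitHub Actions workflow that syncs this repository to the MWAA bucket.
# Replace the placeholder role ARN, Region, and bucket name before committing.
mkdir -p .github/workflows
cat > .github/workflows/mwaa-deploy.yml <<'EOF'
name: Deploy to Amazon MWAA

on:
  push:
    branches: [main]

permissions:
  id-token: write   # lets the job request an OIDC token from GitHub
  contents: read    # lets actions/checkout read the repository

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Check out the repository
        uses: actions/checkout@v4

      - name: Assume the AWS role through OIDC
        uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: arn:aws:iam::111122223333:role/github-mwaa-deploy
          aws-region: us-east-1

      - name: Sync to the MWAA bucket
        run: |
          aws s3 sync . s3://my-mwaa-bucket \
            --follow-symlinks --delete \
            --exclude '.git/*' --exclude '.github/*'
EOF
```

Because --delete removes bucket objects that are not in the repository, keep everything the environment needs (for example, requirements.txt) in the repository rather than uploading it to the bucket by hand.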

Note: Workflows in GitHub Actions use files in YAML syntax, and must have either a .yml or .yaml file extension. You must store workflow files in the .github/workflows directory of your repository.

The workflow runs the standard AWS CLI aws s3 sync command to sync a directory (either from your repository or generated during your workflow) with a remote Amazon S3 bucket. You can add parameters to this command to meet your organization’s security needs.

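The following options are sensible defaults for the sync:

- --follow-symlinks won’t hurt and fixes some symbolic link problems that may appear.

- --delete removes files from the S3 bucket that are not present in the latest version of your repository/build. Because the S3 bucket for an Amazon MWAA environment must have versioning enabled, the removed files remain in the bucket as noncurrent versions that you can still recover.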

Optional

If you’re uploading the root of your repository, adding --exclude '.git/*' prevents your .git folder from syncing, which would expose your source code history if your project is closed-source. To exclude more than one pattern, use one --exclude flag per pattern. The single quotes are also important: they stop your shell from expanding the * wildcard before the AWS CLI sees it.
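Before relying on the pipeline, you can preview what the sync would change from a local checkout. The following function is a sketch that reuses the options discussed above; the function name and the extra .github/* exclusion are illustrative, and it assumes the AWS CLI is configured with credentials that can write to the bucket. Without --apply it adds --dryrun, so the AWS CLI only prints the uploads and deletions it would perform.

```shell
#!/usr/bin/env bash
# Preview, then optionally run, the repository-to-bucket sync.
# Usage: sync_to_mwaa <bucket> [--apply]
sync_to_mwaa() {
  local bucket="$1" mode="${2:-}"
  # One --exclude flag per pattern; the quotes keep the shell from expanding *.
  local -a args=(s3 sync . "s3://${bucket}" --follow-symlinks --delete
    --exclude '.git/*' --exclude '.github/*')

  # Default to a dry run so nothing changes until --apply is given.
  if [[ "$mode" != "--apply" ]]; then
    args+=(--dryrun)
  fi
  aws "${args[@]}"
}
```

For example, sync_to_mwaa my-mwaa-bucket previews the changes, and sync_to_mwaa my-mwaa-bucket --apply performs them.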

Verification

Check the Actions tab of your GitHub repository for the new workflow.

Based on the on attribute (a push to the main branch, in this example), perform the triggering action in GitHub and verify that the workflow job was triggered. The deploy job shows a green status when it succeeds.

On success, the workflow deploy job should look like the following:

Verify the detailed steps of the process by clicking on the deploy job.

Step 3: Verify sync to the S3 bucket and Airflow UI

Verify that the latest files and changes have been synced to your target Amazon S3 bucket configured for Amazon MWAA.

Verify that the latest DAG changes were picked up by navigating to the Airflow UI for your Amazon MWAA environment.
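If you prefer to check from a terminal, Amazon MWAA also exposes the Apache Airflow CLI through a short-lived token returned by aws mwaa create-cli-token. The following function is a sketch that runs dags list through that endpoint; the function name is hypothetical, it assumes curl and python3 are installed, and it only works from a network that can reach the environment’s web server (for example, from inside the VPC when the web server uses private access).

```shell
#!/usr/bin/env bash
# List DAGs through the Amazon MWAA CLI endpoint instead of the Airflow UI.
# Usage: list_mwaa_dags <environment-name>
list_mwaa_dags() {
  local env_name="$1" creds token host

  # create-cli-token returns a short-lived token and the web server hostname.
  creds="$(aws mwaa create-cli-token --name "$env_name" \
    --query '[CliToken, WebServerHostname]' --output text)" || return 1
  read -r token host <<<"$creds"

  # The endpoint runs one Airflow CLI command and returns JSON whose
  # stdout field is base64-encoded; python3 decodes and prints it.
  curl --silent --fail --request POST "https://${host}/aws_mwaa/cli" \
    --header "Authorization: Bearer ${token}" \
    --header "Content-Type: text/plain" \
    --data-raw "dags list" |
    python3 -c 'import base64, json, sys; print(base64.b64decode(json.load(sys.stdin)["stdout"]).decode(), end="")'
}
```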

Optional: Deleting the CI/CD setup

If you are referring to this article just to understand how this works, and you no longer need the CI/CD resources, then you can clean up the resources when you are done. Follow the process as outlined in GitHub documentation to delete the repository. Keep in mind this is an irreversible process as it will delete the repository and all its associated workflows. Users will no longer be able to connect to the repository, but they still will have access to their local repositories.

Use with AWS CodeCommit and AWS CodePipeline

AWS CodeCommit is a fully managed source control service that hosts secure Git-based repositories. CodeCommit makes collaborating on code in a secure and highly scalable ecosystem easier for teams.

AWS CodePipeline is a fully managed continuous delivery service that helps automate release pipelines for fast and reliable application and infrastructure updates.

You can configure a CodeCommit repository, which acts as a Git-based source control system, without worrying about scaling its infrastructure, along with CodePipeline, which automates the release process when there is a code change. This can allow you to deliver features and updates rapidly and reliably.

You can set this up using the AWS Management Console or AWS CloudFormation. CloudFormation lets you model a collection of related AWS and third-party resources, provision them quickly and consistently, and manage them throughout their lifecycles by treating infrastructure as code.

Set up using AWS Management Console

Prerequisites

- A CodeCommit repository. To create a CodeCommit repository:

  - Open the CodeCommit console.

  - Choose the AWS Region in which you want to create the repository and pipeline. For more information, refer to the AWS service endpoints documentation.

  - On the Repositories page, choose Create repository.

  - For Repository name, enter a name for your repository (for example, mwaa-code-repo).

  - Choose Create.

- Source files for your Airflow project. In the following example, I have configured the subfolders within my main repository:

  - dags/ for my Apache Airflow DAGs.

  - plugins/ for all of my plugin .zip files.

  - requirements/ for my requirements.txt files.
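If you prefer the AWS CLI to the console, the repository can be created with a single command. This sketch wraps aws codecommit create-repository in a function (the function name and description text are illustrative) and prints the HTTPS clone URL that you can pass to git clone.

```shell
#!/usr/bin/env bash
# Create the CodeCommit repository and print its HTTPS clone URL.
# Usage: create_mwaa_repo <repository-name> <region>
create_mwaa_repo() {
  local repo_name="$1" region="$2"
  aws codecommit create-repository \
    --region "$region" \
    --repository-name "$repo_name" \
    --repository-description "Apache Airflow DAGs, plugins, and requirements for Amazon MWAA" \
    --query repositoryMetadata.cloneUrlHttp \
    --output text
}
```

For example, create_mwaa_repo mwaa-code-repo us-east-1.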

Step 1: Push Apache Airflow source files to your CodeCommit repository

You can use Git or the CodeCommit console to upload your files. To use the Git command line from a cloned repository on your local computer, set main as the default branch name, stage all of the files at once, commit the files with a commit message, and then push them from the local repo to the CodeCommit repository.
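As a sketch of the Git steps above, the following function stages, commits, and pushes everything in the current clone (the function name and default commit message are hypothetical). Instead of changing Git configuration, it pushes HEAD to main, which creates or updates the main branch in CodeCommit whatever the local branch is called. It assumes the remote is named origin, which is the name git clone gives it.

```shell
#!/usr/bin/env bash
# Stage, commit, and push the Airflow files from a clone of the repository.
# Usage: push_airflow_files [commit-message]
push_airflow_files() {
  local message="${1:-Add Apache Airflow DAGs and supporting files}"

  # Stage every new, modified, and deleted file at once.
  git add -A
  git commit -m "$message" || return 1
  # HEAD:main pushes the current local branch to main on the remote.
  git push origin HEAD:main
}
```

Run it from the root of the clone, for example push_airflow_files "Add initial DAGs".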

To use the CodeCommit console to upload files:

- Open the CodeCommit console and choose your repository from the Repositories list.

- Choose Add file, Upload file.

- Select Choose file, and then browse for your file. Commit the change by entering your user name and email address.

- Choose Commit changes.

- Repeat this step for each file you want to upload.

Step 2: Create your pipeline

In this section, you create a pipeline with the following actions:

- A source stage with a CodeCommit action in which the source artifacts are the files for your Airflow workflows.

- A deployment stage with an Amazon S3 deployment action.

To create a pipeline with the wizard:

Sign in to the AWS Management Console and open the CodePipeline console.

On the Welcome page, Getting started page, or Pipelines page, choose Create pipeline.

In Choose pipeline settings, enter codecommit-mwaa-pipeline for Pipeline name.

For Service role, choose New service role to allow CodePipeline to create a service role in AWS Identity and Access Management (IAM).

Leave the settings under Advanced settings at their defaults, and then choose Next.

In Add source stage, choose AWS CodeCommit for Source provider.

For Repository name, choose the name of the CodeCommit repository you created in Step 1: Push Apache Airflow source files to your CodeCommit repository.

In Branch name, choose the name of the branch that contains your latest code update. Unless you created a different branch on your own, only main is available.

After you select the repository name and branch, the Amazon CloudWatch Events rule to be created for this pipeline is displayed.

Choose Next.

In Add build stage, choose Skip build stage, and then accept the warning message by choosing Skip again.

Choose Next.

In Add deploy stage, choose Amazon S3 for Deploy provider.

For Bucket, enter the name of the private S3 bucket configured for your Amazon MWAA environment.

Select Extract file before deploy.

Note: The deployment fails if you do not select Extract file before deploy, because the CodeCommit action in your pipeline packages the source artifacts as a single .zip file.

When Extract file before deploy is selected, Deployment path is displayed. Enter the name of the path you want to use. This creates a folder structure in Amazon S3 to which the files are extracted. For this tutorial, leave this field blank.

Choose Next.

In the Review step, review the information, and then choose Create pipeline.

After your pipeline runs successfully, open the Amazon S3 console and verify that your files appear in the S3 bucket configured for your MWAA environment.
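You can also check the result from the AWS CLI. This sketch defines two small helper functions (their names are hypothetical): one prints the status of the pipeline’s most recent execution, such as InProgress, Succeeded, or Failed, and the other lists the DAG files that reached the bucket, assuming the dags/ prefix used in this example.

```shell
#!/usr/bin/env bash
# Print the status of the pipeline's most recent execution.
# Usage: latest_pipeline_status <pipeline-name>
latest_pipeline_status() {
  aws codepipeline list-pipeline-executions \
    --pipeline-name "$1" \
    --max-items 1 \
    --query 'pipelineExecutionSummaries[0].status' \
    --output text
}

# List the objects under the dags/ prefix of the MWAA bucket.
# Usage: list_synced_dags <bucket>
list_synced_dags() {
  aws s3 ls "s3://$1/dags/" --recursive
}
```

For example, latest_pipeline_status codecommit-mwaa-pipeline.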

Verify that the latest DAG changes are reflected in the workflow by navigating to the Airflow UI for your MWAA environment.

Optional: Deleting the CI/CD setup

If you are referring to this article just to understand how this works, and you no longer need the CI/CD resources, then you can clean up the resources when you are done. Keep in mind this is an irreversible process as it will destroy all resources, including the CodeCommit repository, so make backups of anything you want to keep. Users will no longer be able to connect to the repository in AWS CodeCommit, but they still will have access to their local repositories.

Using the console

Sign in to the AWS Management Console and open the CodePipeline console. Delete the CodePipeline pipeline created in Step 2: Create your pipeline by selecting the pipeline name and then the Delete pipeline button. To confirm deletion, type delete in the field and then select Delete.

Open the CodeCommit console. Delete the CodeCommit repository created in the Prerequisites by selecting the repository name and then the Delete repository button. To confirm deletion, type delete in the field and then select Delete.

Using the AWS CLI

In the terminal, run the following to delete the resources created by the manual steps:

- aws codepipeline delete-pipeline --name codecommit-mwaa-pipeline (where codecommit-mwaa-pipeline is the name of the pipeline created in Step 2: Create your pipeline). This command returns nothing.

- aws codecommit delete-repository --repository-name mwaa-code-repo (where mwaa-code-repo is the name of the repository created in the Prerequisites). If successful, the output shows the ID of the CodeCommit repository that was permanently deleted.

Set up using AWS CloudFormation

Prerequisites

- Source artifacts for your Airflow project. In the following example, I have configured the subfolders:

  - dags/ for my Apache Airflow DAGs.

  - plugins/ for all of my plugin .zip files.

  - requirements/ for my requirements.txt files.

- An IAM role that has access to run AWS CloudFormation and to use CodeCommit and CodePipeline.

Step 1: Zip the artifacts and upload them to the Amazon S3 bucket configured for MWAA

When created via CloudFormation, AWS CodeCommit requires information about the Amazon S3 bucket that contains a .zip file of code to be committed to the repository. Changes to this property are ignored after initial resource creation.

Create a .zip file named Artifacts.zip that contains the Airflow artifacts, with the dags/, plugins/, and requirements/ folders at the root of the archive.

Upload the Artifacts.zip file to the root of the S3 bucket configured for MWAA.
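As a sketch of these two steps, assuming the zip utility and the AWS CLI are installed, the following function builds Artifacts.zip from the three folders and copies it to the root of the MWAA bucket (the function name is hypothetical). Run it from the directory that contains dags/, plugins/, and requirements/ so that those folders sit at the root of the archive.

```shell
#!/usr/bin/env bash
# Package the Airflow folders into Artifacts.zip and upload it to the MWAA bucket.
# Usage: upload_seed_artifacts <mwaa-bucket>
upload_seed_artifacts() {
  local bucket="$1"
  # Start from a fresh archive; zip would otherwise update an existing one.
  rm -f Artifacts.zip
  # -r recurses into each folder and keeps the folder structure.
  zip -r Artifacts.zip dags plugins requirements || return 1
  aws s3 cp Artifacts.zip "s3://${bucket}/Artifacts.zip"
}
```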

After creating the CodeCommit repository, CloudFormation commits the contents of the .zip file, including the folder structure, to it.

Step 2: Run AWS CloudFormation to create the AWS CodeCommit repository and AWS CodePipeline pipeline

In this section, you create the following resources:

- A CodeCommit repository to host the Airflow artifacts.

- A CodePipeline pipeline with a source stage that uses a CodeCommit action, where the source artifacts are the files for your Airflow workflows. The template also creates a new S3 bucket to store the pipeline’s build and deployment artifacts.

- A deployment stage with an Amazon S3 deployment action.

Download the required CloudFormation template, AMAZON_MWAA_CICD_Pipeline.yaml, which declares the AWS resources that make up a stack.

Sign in to the AWS Management Console and open the CloudFormation console.

Create a new stack by using one of the following options:

- Choose Create Stack. This is the only option if you have a currently running stack.

- Choose Create Stack on the Stacks page. This option is visible only if you have no running stacks.

On the Specify template page, select Template is ready.

For Specify template, select and upload the CloudFormation template (AMAZON_MWAA_CICD_Pipeline.yaml) that you saved on your local computer in the previous step.

To accept your settings, choose Next, and proceed with specifying the stack name and parameters.

After selecting a stack template, specify the stack name and values for the parameters that were defined in the template.

On the Specify stack details page, type a stack name in the Stack name box.

In the Parameters section, specify parameters that are defined in the stack template.

- CodeCommitRepoName: Provide a meaningful name for your source CodeCommit repository.

- CodePipelineName: Provide a meaningful name for your CodePipeline pipeline.

- MWAAs3BucketName: Provide the name of the S3 bucket that has been configured for your MWAA environment.

When you are satisfied with the parameter values, choose Next to proceed with setting options for your stack.

After specifying parameters that are defined in the template, you can set additional options for your stack. When you have entered all your stack options, choose Next to proceed with reviewing your stack.

On the Review page, review the details of your stack.

If you need to change any of the values before launching the stack, choose Edit on the appropriate section to go back to the page that has the setting that you want to change.

Check the box that says I acknowledge that AWS CloudFormation might create IAM resources.

Choose Create stack to launch the stack.

After the stack has been successfully created, its status changes to CREATE_COMPLETE. You can then choose the Outputs tab to view your stack’s outputs if you have defined any in the template.

You can also create the same stack by running the aws cloudformation create-stack command, passing your own environment-specific values in place of the example values mwaa-cicd-stack (stack name), mwaa-code-repo (CodeCommitRepoName), mwaa-codecommit-pipeline (CodePipelineName), and mwaa-code-commit-bucket (MWAAs3BucketName).
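The create-stack command with the example values looks roughly like the following sketch, wrapped in a function whose arguments default to those values (the function name is hypothetical). It assumes the template file is in the current directory and passes CAPABILITY_NAMED_IAM, which CloudFormation accepts for templates that create IAM resources with or without custom names. The final wait call blocks until the stack reaches CREATE_COMPLETE and exits with an error if creation fails.

```shell
#!/usr/bin/env bash
# Create the CI/CD stack from the downloaded template and wait for CREATE_COMPLETE.
# Usage: create_cicd_stack [stack] [repo] [pipeline] [mwaa-bucket]
create_cicd_stack() {
  local stack="${1:-mwaa-cicd-stack}" repo="${2:-mwaa-code-repo}"
  local pipeline="${3:-mwaa-codecommit-pipeline}" bucket="${4:-mwaa-code-commit-bucket}"

  aws cloudformation create-stack \
    --stack-name "$stack" \
    --template-body file://AMAZON_MWAA_CICD_Pipeline.yaml \
    --parameters \
      "ParameterKey=CodeCommitRepoName,ParameterValue=${repo}" \
      "ParameterKey=CodePipelineName,ParameterValue=${pipeline}" \
      "ParameterKey=MWAAs3BucketName,ParameterValue=${bucket}" \
    --capabilities CAPABILITY_NAMED_IAM || return 1

  # Returns non-zero if the stack ends up in a failed or rolled-back state.
  aws cloudformation wait stack-create-complete --stack-name "$stack"
}
```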

Navigate to the CloudFormation console and wait for the stack to be in CREATE_COMPLETE state.

Step 3: Verify the newly created CodeCommit repository by making a change to a DAG file

Git commit a new file or push a change to an existing file to the newly created CodeCommit repository.

Wait for the CodePipeline pipeline to complete.

Verify that the change has been synced to the Amazon S3 bucket configured for Amazon MWAA.

Verify that the latest DAG changes have been reflected in your workflow by navigating to the Airflow UI for your MWAA environment.

Step 4: Remove the Artifacts.zip file from the S3 bucket configured for MWAA

Permanently delete the Artifacts.zip uploaded in Step 1.
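Because the MWAA bucket has versioning enabled, deleting Artifacts.zip without selecting its versions (or running aws s3 rm) only adds a delete marker, and the earlier versions stay in the bucket. The following sketch (function name hypothetical) permanently removes every version and delete marker of a single key with aws s3api delete-object.

```shell
#!/usr/bin/env bash
# Permanently delete every version and delete marker of one object key.
# Usage: purge_object_versions <bucket> <key>
purge_object_versions() {
  local bucket="$1" key="$2" ids version_id

  # --prefix can match other keys, so filter on the exact key; the pipe (|)
  # ends the flatten projection before the filter is applied.
  ids="$(aws s3api list-object-versions --bucket "$bucket" --prefix "$key" \
    --query "[Versions, DeleteMarkers][] | [?Key=='${key}'].VersionId" \
    --output text)" || return 1

  # Text output separates IDs with whitespace, which the for loop splits on.
  for version_id in $ids; do
    [ "$version_id" = "None" ] && continue
    aws s3api delete-object --bucket "$bucket" --key "$key" \
      --version-id "$version_id" || return 1
  done
}
```

For example, purge_object_versions my-mwaa-bucket Artifacts.zip.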

Optional: Deleting the CI/CD setup

If you are referring to this article just to understand how this works, and you no longer need the CI/CD resources, then you can clean up the resources when you are done. Keep in mind this is an irreversible process as it will destroy all resources, including the CodeCommit repository, so make backups of anything you want to keep. Users will no longer be able to connect to the repository in AWS CodeCommit, but they still will have access to their local repositories.

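From the AWS CLI, run aws cloudformation delete-stack --stack-name mwaa-cicd-stack, replacing mwaa-cicd-stack with the name of your stack.

This command does not generate any output.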

Open the CloudFormation console, select the stack that you are deleting, and navigate to the Events tab. The stack is in the DELETE_FAILED state: CloudFormation could not delete the S3 bucket used as the pipeline’s artifact store because the bucket was not empty.

Navigate to the S3 console, then empty and then delete the S3 bucket used by the created pipeline as the artifact store.

After you have done that, run the delete-stack command again; it should remove the stack from your CloudFormation console.
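The cleanup can also be scripted so that the artifact bucket is emptied before CloudFormation tries to delete it, which avoids the DELETE_FAILED state described above. In this sketch (function name hypothetical), the bucket names come from the stack’s AWS::S3::Bucket resources. It assumes those buckets are not versioned; if they are, their object versions must also be removed. Because aws s3 rm --recursive is destructive, review the stack’s resources before running it.

```shell
#!/usr/bin/env bash
# Empty the stack's S3 buckets, then delete the stack and wait for completion.
# Usage: delete_cicd_stack <stack-name>
delete_cicd_stack() {
  local stack="$1" bucket

  # Physical IDs of the buckets the template created (the pipeline artifact store).
  for bucket in $(aws cloudformation describe-stack-resources --stack-name "$stack" \
      --query "StackResources[?ResourceType=='AWS::S3::Bucket'].PhysicalResourceId" \
      --output text); do
    echo "emptying s3://${bucket}"
    aws s3 rm "s3://${bucket}" --recursive || return 1
  done

  aws cloudformation delete-stack --stack-name "$stack" || return 1
  aws cloudformation wait stack-delete-complete --stack-name "$stack"
}
```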

Use with Bitbucket and Bitbucket Pipelines

If you are using Bitbucket, you can sync the contents of your repository to Amazon S3 with the aws-s3-deploy pipe in Bitbucket Pipelines.

Prerequisites

- Access to a Bitbucket repository

- A pair of AWS user credentials (AWS access key ID and AWS secret access key) that have appropriate permissions to update the Amazon S3 bucket configured for your MWAA environment

Step 1: Push Apache Airflow source files to your Bitbucket repository

You can use Git or the Bitbucket console to upload your files.

To use the Git command line from a cloned repository on your local computer, stage all of your files at once with git add -A, commit them with a commit message using git commit -m "<your message>", and push them from your local repo to your Bitbucket repository with git push.

Step 2: Create your Bitbucket pipeline

Create a bitbucket-pipelines.yml file (Bitbucket Pipelines reads its configuration from a file with this name) in the root of your repository that runs the aws-s3-deploy pipe against your MWAA bucket.
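A minimal sketch of the pipeline file follows, written out with a shell here-document so you can create it from the repository root. It runs the aws-s3-deploy pipe on every push to main. The bucket name and Region are placeholders, AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are assumed to be repository variables holding the credentials from the prerequisites, and the pipe version 1.1.0 is an assumption; pin the version listed in the pipe’s documentation.

```shell
#!/usr/bin/env bash
# Create bitbucket-pipelines.yml in the repository root.
# Placeholder bucket and Region; the pipe version pin is an assumption.
cat > bitbucket-pipelines.yml <<'EOF'
pipelines:
  branches:
    main:
      - step:
          name: Sync to the Amazon MWAA bucket
          script:
            - pipe: atlassian/aws-s3-deploy:1.1.0
              variables:
                AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
                AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
                AWS_DEFAULT_REGION: 'us-east-1'
                S3_BUCKET: 'my-mwaa-bucket'
                LOCAL_PATH: $BITBUCKET_CLONE_DIR
EOF
```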

Change the S3_BUCKET name to match the MWAA bucket name for your environment.

Change the AWS Region to match the Region where the Amazon MWAA bucket exists for your environment.

Ensure that the repository variables holding your AWS access key ID and secret access key are defined in your Bitbucket repository, so that they are available as environment variables in the build container.

Commit and push bitbucket-pipelines.yml to your Bitbucket repository.

Step 3: Verify that the Pipeline ran successfully

In Bitbucket Pipelines, verify that the pipeline ran successfully.

In your target Amazon S3 bucket, verify that all the files have been copied successfully.

By navigating to the Airflow UI for your MWAA environment, verify that the latest DAG changes have been picked up.

Optional: Deleting the CI/CD setup

If you are referring to this article just to understand how this works, and you no longer need the CI/CD resources, then you can clean up the resources when you are done. Follow the process as outlined in the Bitbucket documentation to delete the repository. Keep in mind this is an irreversible process as it will delete the repository and all its associated pipelines. Users will no longer be able to connect to the repository, but they still will have access to their local repositories.

Use with Jenkins

If you are using Jenkins as your build server, you can easily and automatically upload your builds from Jenkins to Amazon S3. After doing a one-time configuration on your Jenkins server, syncing builds to S3 is as easy as running a build; running anything additional is not needed.

Prerequisites

- A Jenkins server running on an Amazon Elastic Compute Cloud (Amazon EC2) instance in your account.

- A VPC endpoint to your Amazon S3 bucket configured for MWAA in the VPC where your Amazon EC2 instance is running.

- An Amazon EC2 IAM role that has access to your Amazon S3 bucket configured for MWAA.

- If you are running Jenkins on an on-premises instance, you also need a pair of AWS user credentials (AWS access key ID and AWS secret access key) that have appropriate permissions to update the S3 bucket configured for your MWAA environment.

Step 1: Install Amazon S3 plugin in Jenkins

Navigate to Dashboard, Manage Jenkins, Manage Plugins and select the Available tab. Find the S3 publisher plugin and install it.

Navigate to Manage Jenkins and select Configure system. Find Amazon S3 profiles and then Add.

Provide a profile name, access key, and secret access key for your AWS account or an IAM role.

Note: If you are running your Jenkins server on an Amazon EC2 instance, use the IAM role. If you are running the Jenkins server on an on-premises server, provide an access key and secret key.

Select Add, Save.

Step 2: Configure a Jenkins job

Navigate to the Jenkins job and find Post build actions. Add a new Post build action and select Publish artifacts to S3 Bucket.

Select your existing S3 profile and define the files to upload.

Select Save. Now each time you run a successful build, the artifacts will automatically upload to your Amazon S3 bucket.

Select Build Now.

Step 3: Verify the upload

Navigate to your Amazon S3 bucket and verify the upload. Verify that the latest DAG changes have been picked up by navigating to the Airflow UI for your MWAA environment.

Optional: Deleting the CI/CD setup

If you are just using this article to understand how this works, and you no longer need the build specifications, then you can clean them up when you are done. Disable/delete the project from the Jenkins console.

Use with other CI/CD tools

The idea is to configure your continuous integration process to sync Airflow artifacts from your source control system to the desired Amazon S3 bucket configured for MWAA. Because several CI/CD tools are available, let’s walk through a high-level overview, with links to more in-depth documentation.

CircleCI: Enable pipelines, add an orbs stanza below the version key in your configuration to invoke the orb, and then configure the Amazon S3 orb, which lets you sync directories or copy files to an S3 bucket. Refer to the documentation to learn more.

GitLab CI: In your .gitlab-ci.yml, define a job that runs the aws s3 cp or aws s3 sync command in a Docker image with Python preinstalled. Refer to the documentation to learn more.
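As a sketch for GitLab CI, the following here-document creates a .gitlab-ci.yml with one deploy job that installs the AWS CLI with pip in a Python image and syncs the repository to the MWAA bucket on pushes to main. The job name, image tag, and MWAA_BUCKET variable are assumptions, and the AWS credentials are expected as GitLab CI/CD variables (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_DEFAULT_REGION), which the AWS CLI reads from the environment.

```shell
#!/usr/bin/env bash
# Create a .gitlab-ci.yml with a single job that syncs to the MWAA bucket.
# MWAA_BUCKET and the AWS credential variables are assumed GitLab CI/CD variables.
cat > .gitlab-ci.yml <<'EOF'
deploy_to_mwaa:
  stage: deploy
  image: python:3.11-slim
  rules:
    - if: '$CI_COMMIT_BRANCH == "main"'
  script:
    - pip install awscli
    - aws s3 sync . "s3://${MWAA_BUCKET}" --follow-symlinks --delete --exclude '.git/*'
EOF
```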

TeamCity: Use the teamcity-s3-artifact-storage-plugin, as explained in the documentation to publish artifacts to Amazon S3.

Bamboo: Add a script task to the last step of your plan that would copy or sync artifacts to Amazon S3 as explained in the documentation.

Conclusion

In this article, we explained how to extend existing CI/CD processes and tools to deploy code to Amazon MWAA. Managing Airflow code and configurations in a central repository helps development teams follow standard processes when creating and supporting multiple workflow applications and when performing change management. Because these tools natively support deploying to Amazon S3, you can automate faster, higher-quality releases to Amazon MWAA, regardless of which Airflow version your environment runs.