Integrating the AWS Lambda Telemetry API with Prometheus and OpenSearch

January 24, 2024

The AWS Lambda service automatically captures telemetry, such as metrics, logs, and traces, and sends it to Amazon CloudWatch and AWS X-Ray. This is available out of the box, with nothing for you to set up. However, if your enterprise has adopted an open source observability solution such as Prometheus or OpenSearch, you can use the AWS Lambda Telemetry API to stream telemetry directly from the AWS Lambda service to the open source tool of your choice.

This post explains how the AWS Lambda Telemetry API works and how to integrate it with open source observability and telemetry solutions such as Prometheus and OpenSearch.

Overview

Observability is one of the critical traits of any modern application. It gives you the insights you need to understand what’s happening in your application environment and how to improve its performance. The AWS Cloud Adoption Framework defines monitoring, tracing, and logging as the three pillars of observability.

AWS provides out-of-the-box solutions for addressing observability requirements when building serverless applications using the AWS Lambda service.

- The AWS Lambda service sends logs to Amazon CloudWatch Logs. These include both system logs generated by the AWS Lambda service (also known as platform logs) and application logs produced by your functions (also known as function logs). You can use additional Amazon CloudWatch capabilities, such as Logs Insights to query the logs or Live Tail to debug your functions.

- The AWS Lambda service emits operational and performance metrics to Amazon CloudWatch Metrics, which you can use to build operational and business dashboards. You can build widgets that visualize these metrics and set alarms to respond to changes in utilization or error rates.

- When you enable Active Tracing for your Lambda functions, or when a Lambda function is invoked by a service or application that also has AWS X-Ray tracing enabled, the AWS Lambda service collects initialization and invocation traces and sends them to AWS X-Ray, where you can visualize them, identify bottlenecks, and debug unexpected behavior.

The AWS Lambda service provides these capabilities out of the box through native integrations with Amazon CloudWatch and AWS X-Ray, making it easy for application builders to meet their observability needs without extra instrumentation or code changes. However, some customers choose to build their own centralized enterprise observability platform using open source alternatives such as Prometheus or OpenSearch. The AWS Lambda Telemetry API lets you use these open source tools to receive fine-grained, per-invoke telemetry data directly from the AWS Lambda service.

Understanding the AWS Lambda Telemetry API

When you use the AWS Lambda Telemetry API, your extensions receive telemetry data directly from the AWS Lambda service. During function initialization and invocation, AWS Lambda automatically captures telemetry, such as logs, platform metrics, and platform traces, and delivers it to subscribed extensions in near real time.

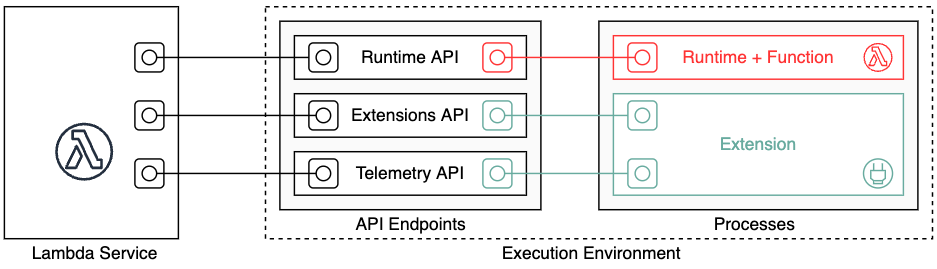

The diagram above illustrates the core components of the Extensions API and the Telemetry API. You use the Extensions API to register your extension with the AWS Lambda service, and the Telemetry API to subscribe to telemetry streams; the AWS Lambda service then delivers the telemetry data to a local HTTP endpoint that your extension hosts. You can read more about building extensions that use the Telemetry API in the announcement blog post and in the AWS Lambda documentation.
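In practice, receiving telemetry means hosting a small HTTP endpoint: the AWS Lambda service sends HTTP POST requests, each carrying a JSON array of events, to the destination your extension subscribed with. The following is a minimal sketch of such an endpoint using only the Python standard library. The port (8080), the queue hand-off, and all names are illustrative assumptions rather than part of the Telemetry API.

```python
import json
import queue
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical port; it must match the destination URI used when subscribing.
LISTENER_PORT = 8080

# Events are handed off to a queue so the handler can respond quickly;
# a separate worker (not shown) would drain it and forward the events.
EVENTS: "queue.Queue[dict]" = queue.Queue()


def enqueue_batch(body: bytes) -> int:
    """Parse one delivery (a JSON array of events) and queue each event."""
    batch = json.loads(body)
    for event in batch:
        EVENTS.put(event)
    return len(batch)


class TelemetryHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        enqueue_batch(self.rfile.read(length))
        self.send_response(200)
        self.end_headers()

    def log_message(self, format: str, *args) -> None:
        # Silence per-request access logs so they don't end up in the extension's own logs.
        pass


def start_listener(host: str = "sandbox.localdomain", port: int = LISTENER_PORT) -> HTTPServer:
    """Serve the telemetry endpoint on a background daemon thread."""
    server = HTTPServer((host, port), TelemetryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Keeping the handler thin (parse, enqueue, return 200) means slow downstream backends such as a PushGateway or an OpenSearch cluster don't hold up telemetry delivery.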

There are three types of telemetry streams your extensions can subscribe to:

- Platform telemetry – these are logs, traces, and metrics produced by the AWS Lambda service. This type of telemetry gives you insights into the execution environment lifecycle, the extension lifecycle, and function invocations.

- Function logs – these are custom logs produced by the function code, such as console.log() output.

- Extension logs – similar to function logs, but produced by extensions.

Once your extension subscribes to the Telemetry API, as described in the documentation, it starts receiving the telemetry events stream from the AWS Lambda service.
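Registration and subscription are HTTP calls to the local endpoint named by the AWS_LAMBDA_RUNTIME_API environment variable. The following sketch builds both requests with the Python standard library. The paths and headers follow the Extensions API and Telemetry API documentation; the schemaVersion value, the extension name, and port 8080 are assumptions to verify against the current docs.

```python
import json
import os
import urllib.request


def build_register_request(runtime_api: str, extension_name: str) -> urllib.request.Request:
    """Extensions API: register for INVOKE and SHUTDOWN lifecycle events."""
    return urllib.request.Request(
        f"http://{runtime_api}/2020-01-01/extension/register",
        data=json.dumps({"events": ["INVOKE", "SHUTDOWN"]}).encode("utf-8"),
        headers={"Lambda-Extension-Name": extension_name},
        method="POST",
    )


def build_subscribe_request(runtime_api: str, extension_id: str, listener_port: int,
                            types=("platform", "function", "extension")) -> urllib.request.Request:
    """Telemetry API: ask Lambda to POST the selected streams to the local listener."""
    body = {
        "schemaVersion": "2022-12-13",  # assumption: check the supported versions in the docs
        "destination": {"protocol": "HTTP", "URI": f"http://sandbox.localdomain:{listener_port}"},
        "types": list(types),
    }
    return urllib.request.Request(
        f"http://{runtime_api}/2022-07-01/telemetry",
        data=json.dumps(body).encode("utf-8"),
        headers={"Lambda-Extension-Identifier": extension_id, "Content-Type": "application/json"},
        method="PUT",
    )


def register_and_subscribe(extension_name: str, listener_port: int = 8080) -> str:
    """Runs inside the Lambda execution environment; returns the extension ID."""
    runtime_api = os.environ["AWS_LAMBDA_RUNTIME_API"]
    with urllib.request.urlopen(build_register_request(runtime_api, extension_name)) as resp:
        extension_id = resp.headers["Lambda-Extension-Identifier"]
    with urllib.request.urlopen(build_subscribe_request(runtime_api, extension_id, listener_port)) as resp:
        resp.read()
    return extension_id
```

The extension name must match the extension's executable file name. The extension should start its HTTP listener before subscribing; after subscribing, it continues the normal Extensions API loop of calling GET /2020-01-01/extension/event/next to wait for INVOKE and SHUTDOWN events.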

Telemetry API event schema

To build the integration with Prometheus and OpenSearch, you need to understand the event schema emitted by the Telemetry API. A single Lambda function invocation produces a sequence of events like the following:

- The telemetry stream starts with an event of type platform.start, which is emitted when the function invocation starts. It contains a timestamp, the request ID, the function version, and the tracing segment.

- The next event in the sequence is platform.runtimeDone, which is emitted when the runtime has completed the function invocation. It adds information about the invocation status, such as success or failure, and metrics such as invocation duration and bytes produced.

- The last event has the type platform.report. It is emitted once the invocation has completed and provides invocation metrics such as invocation duration, initialization duration, and memory usage.

You can see the full list of events and event schemas in the Telemetry API docs.
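To make that sequence concrete, the following sketch groups a batch of events by request ID. The batch is a hand-written, illustrative example with made-up values, trimmed to the fields described above; the function names are hypothetical, and the Telemetry API docs remain the reference for the complete schemas.

```python
from collections import defaultdict

# Illustrative batch for one invocation; values are made up and records are trimmed.
SAMPLE_BATCH = [
    {"time": "2024-01-24T10:00:00.000Z", "type": "platform.start",
     "record": {"requestId": "req-1", "version": "$LATEST"}},
    {"time": "2024-01-24T10:00:00.118Z", "type": "platform.runtimeDone",
     "record": {"requestId": "req-1", "status": "success",
                "metrics": {"durationMs": 118.4, "producedBytes": 42}}},
    {"time": "2024-01-24T10:00:00.121Z", "type": "platform.report",
     "record": {"requestId": "req-1", "status": "success",
                "metrics": {"durationMs": 120.5, "billedDurationMs": 121,
                            "memorySizeMB": 128, "maxMemoryUsedMB": 64}}},
]


def group_by_request(events):
    """Collect each invocation's platform.* records, keyed by request ID and event type."""
    invocations = defaultdict(dict)
    for event in events:
        record = event.get("record")
        # Function and extension log events carry a log line (or log object) instead,
        # and some platform events (e.g. platform.initStart) have no request ID.
        if not event["type"].startswith("platform.") or not isinstance(record, dict):
            continue
        request_id = record.get("requestId")
        if request_id is not None:
            invocations[request_id][event["type"]] = record
    return dict(invocations)


def summarize(invocation):
    """Pull the headline numbers out of one invocation's records."""
    report = invocation.get("platform.report", {}).get("metrics", {})
    done = invocation.get("platform.runtimeDone", {})
    return {
        "status": done.get("status"),
        "durationMs": report.get("durationMs"),
        "maxMemoryUsedMB": report.get("maxMemoryUsedMB"),
    }
```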

Dispatching telemetry to Prometheus

Prometheus uses a pull model for retrieving metrics: it scrapes metrics from a service at a regular interval. This works well for long-running processes, but it’s not a good fit for the ephemeral nature of Lambda functions. To address this, Prometheus provides a PushGateway component for jobs that are not semantically related to a specific machine or job instance. A Lambda extension can push metrics to the PushGateway, from which Prometheus scrapes them. The following diagram illustrates this flow:

Prometheus supports multiple metric types, such as Counters, Gauges, Summaries, and Histograms. When the extension receives a telemetry event, your extension code can parse the event and push the corresponding metric to the PushGateway. At this stage, the extension can also attach labels (the Prometheus term for metric dimensions), such as the function name.

Your extension code can collect the results of an individual function invocation from the platform.report event and send them to the PushGateway using the Prometheus client library. The sample implementation records each metric as an observation of a Summary metric. Each observation carries a set of labels that indicate where the metric was observed, such as the request ID, the function name, and a UUID generated when the Lambda execution environment was initialized.

Once Prometheus ingests the metrics from the PushGateway, you can visualize them using Grafana:

Dispatching telemetry to OpenSearch

OpenSearch can receive events from a Lambda extension and store them in one or more indices. Each index has a mapping which defines the format of the events in that index, including the JSON structure of the document that makes up the event.

Your Lambda extension code can push telemetry to OpenSearch either directly through the OpenSearch REST API or through an OpenSearch client library. With the bulk operations supported by the OpenSearch API, the extension can batch telemetry events together and send them to OpenSearch in a single request.

Because the data contained in a telemetry event differs by event type, you can use dynamic mapping for the “record” object. For example, you can create an OpenSearch index whose mapping declares “record” as a dynamic object.
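The following minimal sketch builds that index-creation request. It assumes a hypothetical index name (lambda-telemetry), a placeholder domain endpoint, and no authentication; Amazon OpenSearch Service domains usually require signed or otherwise authenticated requests. The mapping types time as a date and type as a keyword, and leaves record dynamic so OpenSearch infers its fields for each event type.

```python
import json
import urllib.request

# "record" is a dynamic object: OpenSearch adds fields as new event types arrive.
INDEX_BODY = {
    "mappings": {
        "properties": {
            "time": {"type": "date"},
            "type": {"type": "keyword"},
            "record": {"type": "object", "dynamic": True},
        }
    }
}


def build_create_index_request(endpoint: str, index: str) -> urllib.request.Request:
    """PUT /<index> with the mapping above (authentication intentionally omitted)."""
    return urllib.request.Request(
        f"{endpoint.rstrip('/')}/{index}",
        data=json.dumps(INDEX_BODY).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def create_index(endpoint: str, index: str = "lambda-telemetry") -> dict:
    with urllib.request.urlopen(build_create_index_request(endpoint, index), timeout=10) as resp:
        return json.loads(resp.read())
```

One caveat: when function or extension logs use the text log format, their record is a plain string, which OpenSearch rejects against an object mapping. Those events need to be wrapped in an object or routed to a separate index.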

Once the index exists, you can send a batch of telemetry events to OpenSearch in a single bulk request.
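A bulk request body is newline-delimited JSON: an action line followed by the document for each event, ending with a trailing newline. The following sketch builds that body from a list of Telemetry API events and posts it to the _bulk endpoint with the Python standard library. The endpoint and index name are placeholders, and authentication is omitted.

```python
import json
import urllib.request


def build_bulk_body(events, index: str) -> str:
    """One {"index": ...} action line plus one document line per event."""
    lines = []
    for event in events:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(event))
    return "\n".join(lines) + "\n"  # the bulk API requires a trailing newline


def send_bulk(endpoint: str, events, index: str = "lambda-telemetry"):
    if not events:
        return None  # nothing to send; an empty bulk body is rejected
    req = urllib.request.Request(
        f"{endpoint.rstrip('/')}/_bulk",
        data=build_bulk_body(events, index).encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        result = json.loads(resp.read())
    # _bulk can return HTTP 200 even when individual items fail, so check "errors".
    if result.get("errors"):
        raise RuntimeError("some telemetry events were rejected by OpenSearch")
    return result
```

Batching this way amortizes one HTTP round trip over many events, which matters because the extension's run time is billed together with the function's.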

After sending the telemetry events to OpenSearch, you can use OpenSearch Dashboards to query and visualize the results.

Sample implementations

Use the following sample extension implementations to get started with forwarding AWS Lambda telemetry data to open source solutions such as Prometheus and OpenSearch. Follow the steps in each README for setup instructions.

- Integrating the AWS Lambda Telemetry API with Prometheus

- Integrating the AWS Lambda Telemetry API with OpenSearch

Extensions using the Telemetry API, like other extensions, share the same billing model as Lambda functions. When using Lambda functions with extensions, you pay for requests served, and the combined compute time used to run your code and all extensions, in 1-ms increments. When sending telemetry from your extensions, you might be subject to data transfer costs. To learn more about the billing for extensions, visit the AWS Lambda pricing page.

Security best practices

Extensions run within the same execution environment as the function, so they have the same level of access to resources such as the file system, networking, and environment variables. AWS Identity and Access Management (IAM) permissions assigned to the function are shared with extensions. Following AWS guidance, grant your functions only the least privileges they require.

Only install extensions from trusted sources. Use Infrastructure as Code (IaC) tools, such as AWS CloudFormation, to simplify attaching the same extension configuration, including IAM permissions, to multiple functions. IaC tools also give you an audit record of the extensions and versions you’ve used previously.

When building extensions, do not log sensitive data. Sanitize payloads and metadata before logging or persisting them for audit purposes.
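As one way to apply that advice, the following sketch masks values whose keys look sensitive before a payload is logged or persisted. The key pattern is a hypothetical example, not a complete list, and the function name is illustrative.

```python
import re

# Hypothetical pattern for key names to treat as sensitive; adjust for your payloads.
SENSITIVE_KEY = re.compile(r"(password|secret|token|authorization|api[_-]?key)", re.IGNORECASE)


def sanitize(value):
    """Return a copy of a JSON-like value with sensitive-looking keys masked."""
    if isinstance(value, dict):
        return {
            k: "***" if SENSITIVE_KEY.search(str(k)) else sanitize(v)
            for k, v in value.items()
        }
    if isinstance(value, list):
        return [sanitize(v) for v in value]
    return value  # strings, numbers, booleans, and None pass through unchanged
```

Key-based masking does not catch secrets embedded in free-text log lines, so treat it as one layer of protection rather than a complete safeguard.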

Conclusion

The AWS Lambda Telemetry API enables Lambda extensions to receive logs, platform traces, and invocation-level metrics directly from the AWS Lambda service. Using this capability, developers and operators can collect telemetry from the AWS Lambda service and dispatch it to open source monitoring and observability tools they are already familiar with, such as Prometheus and OpenSearch.

Useful links

- AWS Lambda Extension API docs

- AWS Lambda Telemetry API docs

- Lambda Extensions Deep-Dive video series on YouTube

- AWS Lambda Extensions samples on GitHub

- AWS Lambda Telemetry API Extensions samples (Node.js, Python, Golang)

- Amazon Managed Service for Prometheus

- Amazon OpenSearch Service